Temperature

The measurement of temperature is a frequent requirement in many test engineering applications. This post will discuss the concept of temperature and the various techniques used to measure it.

Scientifically, temperature is a measure of the average kinetic energy of particles in matter. Colloquially, it refers to how hot or cold something feels.

Temperature is often associated with heat, but the two are distinct concepts. Heat is energy that flows from a region of higher temperature to one of lower temperature, similar to how current flows from a high to a low voltage in an electrical circuit. Temperature serves as a measure of the “degree” of hotness or coldness, similar to how voltage serves as a measure of electric potential.

Scales

Temperature is most often measured in degrees Fahrenheit, Celsius, or Kelvin.

Fahrenheit and Celsius are relative scales: they are defined relative to the freezing and boiling points of water. On the Fahrenheit scale, 32 °F is the freezing point of water and 212 °F is the boiling point. On the Celsius scale these are 0 °C and 100 °C respectively. The numbers themselves are arbitrary, but the scales are intuitive because ice and steam are easily distinguishable everyday states of matter and the temperature difference between them is readily apparent.

Kelvin is the absolute scale, and 0 K corresponds to “absolute zero”, the lowest possible temperature, at which the thermal motion of particles is at its minimum. 0 K was calculated to be -273.15 °C by extrapolation based on Charles’ law. Conversely, 0 °C is 273.15 K (roughly 273 K), which provides an intuition for interpreting temperatures expressed in Kelvin. A room temperature of 25 °C is about 298 K.
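The relationships between the three scales can be sketched in a few lines of Python. The function names are illustrative and not taken from any library.

```python
def celsius_to_kelvin(t_c: float) -> float:
    """Convert degrees Celsius to kelvin (0 °C is 273.15 K)."""
    return t_c + 273.15


def celsius_to_fahrenheit(t_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return t_c * 9.0 / 5.0 + 32.0


def fahrenheit_to_celsius(t_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (t_f - 32.0) * 5.0 / 9.0


if __name__ == "__main__":
    # Freezing point, room temperature, and boiling point of water.
    for t_c in (0.0, 25.0, 100.0):
        print(t_c, "°C =", celsius_to_fahrenheit(t_c), "°F =",
              celsius_to_kelvin(t_c), "K")
```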

Why care about measuring temperature?

Because it affects material properties and rates of reaction.

- The pressure and volume of a gas are directly related to its temperature. A gas heated at constant pressure will expand, and a gas heated in a fixed volume will increase in pressure.

- The electrical resistance of metals increases with temperature.

- Rates of reaction increase with temperature.

- Material properties such as ductility, malleability, and strength are affected by temperature.

- Knowing the initial/current temperature of a system enables calculation of the amount of energy that could be absorbed/released by the system when its temperature changes.
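As a rough illustration of the last point, the energy absorbed or released when a mass changes temperature without a phase change is Q = m·c·ΔT. The sketch below assumes a constant specific heat and uses an approximate value of 4186 J/(kg·K) for liquid water. The function name and the example numbers are assumptions chosen for illustration.

```python
# Approximate specific heat of liquid water (assumed constant over the range).
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def sensible_heat_joules(mass_kg: float, specific_heat_j_per_kg_k: float,
                         t_initial_c: float, t_final_c: float) -> float:
    """Energy absorbed (positive) or released (negative) for a temperature change.

    Valid only when no phase change occurs between the two temperatures.
    """
    return mass_kg * specific_heat_j_per_kg_k * (t_final_c - t_initial_c)


if __name__ == "__main__":
    # Heating 1 kg of water from 25 °C to 100 °C (without boiling it).
    q = sensible_heat_joules(1.0, WATER_SPECIFIC_HEAT_J_PER_KG_K, 25.0, 100.0)
    print(f"Energy required: {q / 1000:.1f} kJ")
```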

Therefore, accurate temperature measurements are essential in many process control applications. Examples: Heating/cooling, cooking, brewing, chemical plants, refineries, engine control, and the list goes on.

Types of temperature sensors

Thermocouple

A thermocouple consists of two wires of different metals electrically bonded at two points. One end of the thermocouple is kept at a known reference temperature (cold junction), and the other end is used as the measurement probe (hot junction). Thermocouples are contact-based instruments: the hot junction must be in physical contact with the object for accurate measurements.

Theory

Thermocouples operate on the thermoelectric (Seebeck) effect. If a temperature difference exists between the two ends of the thermocouple, an electric voltage is generated across the dissimilar metals.

A thermocouple does not measure absolute temperature. It measures a temperature difference between the hot and cold junction. Thermocouple tables which correlate thermoelectric voltages to measured hot junction temperature are published based on the cold junction being in an ice bath at 0°C.

Carrying around a bucket of ice does not make for a practical test instrument. Therefore, modern thermocouple readers use electronic cold junction compensation (CJC) circuits, which use an absolute temperature sensor to measure the temperature of the reference junction. The thermoelectric voltage corresponding to this reference temperature is then added to the measured voltage to compensate for the cold junction not being at 0°C.
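The compensation step can be sketched as follows. This is a simplified model that assumes a constant sensitivity of about 41 µV/°C, which is a reasonable approximation for a Type K thermocouple near room temperature. Real readers use the published reference tables or polynomials instead of a single constant, and the function names here are hypothetical.

```python
# Assumed constant sensitivity (approximate, Type K near room temperature).
SEEBECK_UV_PER_C = 41.0


def temperature_to_voltage_uv(t_c: float) -> float:
    """Thermoelectric voltage relative to a 0 °C reference (linear model)."""
    return SEEBECK_UV_PER_C * t_c


def voltage_to_temperature_c(v_uv: float) -> float:
    """Inverse of the linear model above."""
    return v_uv / SEEBECK_UV_PER_C


def compensate_cold_junction(measured_uv: float, cold_junction_c: float) -> float:
    """Return the hot junction temperature in °C.

    The measured voltage only reflects the difference between the hot and
    cold junctions, so the voltage equivalent of the cold junction
    temperature is added back before converting to temperature.
    """
    total_uv = measured_uv + temperature_to_voltage_uv(cold_junction_c)
    return voltage_to_temperature_c(total_uv)


if __name__ == "__main__":
    # Hot junction at 200 °C, cold junction (terminal block) at 25 °C.
    measured = temperature_to_voltage_uv(200.0) - temperature_to_voltage_uv(25.0)
    print("Uncompensated reading:", voltage_to_temperature_c(measured), "°C")
    print("Compensated reading:  ", compensate_cold_junction(measured, 25.0), "°C")
```

With a linear model the compensation reduces to adding the two temperatures, but working in voltage keeps the structure the same when the constant is swapped for a proper table lookup.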

Thermocouples generate thermoelectric voltage. Therefore, unlike most sensors, a thermocouple requires no applied power! As voltage output devices, thermocouples are easy to use with a DMM/DAQ.

Performance

Thermocouples are millivolt output devices with sensitivities in the range of microvolts per degree C. Therefore they are extremely susceptible to coupled electrical noise.

Thermocouples aren’t the most accurate devices. The temperature reading could have an error of up to ±2.2°C. This may not be a problem when measuring temperatures of several hundred degrees, but it is not acceptable for applications such as measuring room temperature. Thermocouples are generally used to measure very high temperatures such as in ovens and furnaces. Type K thermocouples can measure temperatures as high as 1200°C.

RTD

RTD stands for Resistance Temperature Detector. An RTD infers temperature from the change in resistance of a pure metal (e.g. platinum). The RTD is a passive device; it is essentially a resistor whose value changes predictably with temperature. The most common type of industrial RTD is the PT100, which uses a platinum (PT) sensing element that has a resistance of 100 ohm at 0°C. The relationship between resistance and temperature of the PT100 is shown in the chart below.
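For temperatures at or above 0 °C, the resistance of a standard platinum element is commonly modelled with the quadratic Callendar-Van Dusen equation, R(t) = R0·(1 + A·t + B·t²). The sketch below uses the standard IEC 60751 coefficients for A and B, which are an addition here and should be checked against the datasheet of the actual sensor. The function names are illustrative.

```python
import math

R0_OHM = 100.0   # PT100 resistance at 0 °C
A = 3.9083e-3    # IEC 60751 coefficient, 1/°C
B = -5.775e-7    # IEC 60751 coefficient, 1/°C²


def pt100_resistance(t_c: float) -> float:
    """Resistance in ohms at temperature t_c (valid for 0 °C and above)."""
    return R0_OHM * (1.0 + A * t_c + B * t_c ** 2)


def pt100_temperature(r_ohm: float) -> float:
    """Temperature in °C from resistance, by solving the quadratic for t."""
    # B*t² + A*t + (1 - R/R0) = 0; the '+' root gives t = 0 at R = R0.
    discriminant = A ** 2 - 4.0 * B * (1.0 - r_ohm / R0_OHM)
    return (-A + math.sqrt(discriminant)) / (2.0 * B)


if __name__ == "__main__":
    for t in (0.0, 100.0, 250.0):
        r = pt100_resistance(t)
        print(f"{t:6.1f} °C -> {r:8.3f} ohm -> {pt100_temperature(r):6.1f} °C")
```

The small negative B term is why the PT100 curve is nearly, but not exactly, a straight line.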

RTDs cannot be used directly with voltage input DAQs since DAQs do not have ohmmeter functionality; a signal conditioning module with voltage output is required. RTD usage guide.

Performance

The class A PT100 has an accuracy of ±(0.15 + 0.002·|t|) °C, where t is the measured temperature in °C. Note that the accuracy depends on the temperature being measured and worsens as the temperature moves away from 0 °C. However, it is still better than a thermocouple throughout its range.
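A quick calculation shows how the class A tolerance grows with temperature and how it compares with the ±2.2 °C thermocouple figure quoted earlier. The function name is illustrative, and the absolute value is an assumption so that the formula also behaves sensibly below 0 °C.

```python
THERMOCOUPLE_ERROR_C = 2.2  # figure quoted for thermocouples in this post


def pt100_class_a_tolerance_c(t_c: float) -> float:
    """Class A tolerance in °C at measured temperature t_c (in °C)."""
    return 0.15 + 0.002 * abs(t_c)


if __name__ == "__main__":
    for t in (0.0, 100.0, 400.0):
        tol = pt100_class_a_tolerance_c(t)
        print(f"{t:6.1f} °C: PT100 ±{tol:.2f} °C vs thermocouple ±{THERMOCOUPLE_ERROR_C} °C")
```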

Since RTDs are typically used with signal conditioning modules, coupled noise is not a major concern. However, RTD measurements are subject to error due to the self-heating phenomenon associated with resistors. Since RTDs like the PT100 have a low resistance value, a relatively large excitation current is needed to produce a measurable voltage, and this current can generate enough heat to raise the RTD’s internal temperature above that of the object being measured, which adds error to the measurement.
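The size of the self-heating error can be estimated from the power dissipated in the element, P = I²·R, divided by a dissipation constant that describes how easily the sensor sheds heat to its surroundings. The sketch below is only an order-of-magnitude estimate: the 5 mW/°C dissipation constant is a hypothetical value, and real figures depend on the sensor construction and the medium, so they should come from the datasheet.

```python
def self_heating_error_c(current_a: float, resistance_ohm: float,
                         dissipation_w_per_c: float) -> float:
    """Estimated temperature rise in °C caused by the excitation current."""
    power_w = current_a ** 2 * resistance_ohm  # Joule heating, P = I²R
    return power_w / dissipation_w_per_c


if __name__ == "__main__":
    HYPOTHETICAL_DISSIPATION_W_PER_C = 0.005  # 5 mW/°C, assumed for illustration
    for current_ma in (1.0, 5.0, 10.0):
        rise = self_heating_error_c(current_ma / 1000.0, 100.0,
                                    HYPOTHETICAL_DISSIPATION_W_PER_C)
        print(f"{current_ma:4.1f} mA through 100 ohm -> about {rise:.2f} °C rise")
```

Because the error scales with the square of the current, keeping the excitation current small is the usual way to limit it.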

Thermistor

A thermistor is similar to an RTD in that the sensing element is resistive, and the measurement principle is based on detecting a change in resistance in response to a change in temperature. Unlike an RTD, the thermistor is made of semiconductor material and is highly non-linear, as seen in the chart below.

Thermistors have a resistance in the kilo- and mega-ohm range. Due to its high resistance, a thermistor can be used in a voltage divider circuit and the voltage across the thermistor can be measured by a DAQ. Because of their large resistance values and the low currents involved, thermistors suffer far less from the self-heating problems that exist in RTDs.
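A minimal sketch of the voltage divider approach is shown below. It assumes the thermistor is the lower leg of the divider (fixed resistor to the supply, thermistor to ground) and converts resistance to temperature with the simple Beta model, 1/T = 1/T0 + ln(R/R0)/β. The 10 kΩ, 25 °C, and β = 3950 K values are hypothetical and would normally come from the thermistor datasheet.

```python
import math


def thermistor_resistance_ohm(v_thermistor: float, v_supply: float,
                              r_fixed_ohm: float) -> float:
    """Thermistor resistance from the voltage measured across it."""
    # Divider: v_thermistor = v_supply * Rt / (r_fixed + Rt), solved for Rt.
    return r_fixed_ohm * v_thermistor / (v_supply - v_thermistor)


def beta_temperature_c(r_ohm: float, r0_ohm: float = 10_000.0,
                       t0_c: float = 25.0, beta_k: float = 3950.0) -> float:
    """NTC temperature in °C from resistance using the Beta model."""
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(r_ohm / r0_ohm) / beta_k
    return 1.0 / inv_t - 273.15


if __name__ == "__main__":
    # 5 V supply, 10 kohm fixed resistor, DAQ reads 1.8 V across the thermistor.
    r_t = thermistor_resistance_ohm(1.8, 5.0, 10_000.0)
    print(f"Rt = {r_t:.0f} ohm, T = {beta_temperature_c(r_t):.1f} °C")
```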

There are two types of thermistors: Negative temperature coefficient (NTC) and Positive temperature coefficient (PTC). The resistance of NTC thermistors decreases as temperature increases (as shown in the image above), whereas the resistance of PTC thermistors increases with increasing temperature.

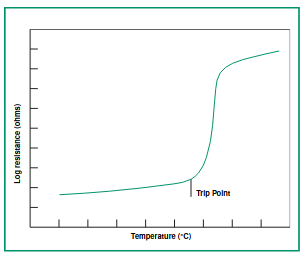

PTCs can be used as thermal protection devices (resettable fuses). In a PTC designed for use as a resettable fuse, the resistance increases from very low to very high past the designed trip point.

Unlike a one-time fuse, a PTC will not interrupt current flow completely. A level of leakage current will remain even after the PTC has tripped.

Performance

Since thermistors are non-linear, using them is not straightforward. A thermistor requires calibration at multiple points rather than a simple two point calibration. However, within their range they are among the most accurate (± 0.1 °C) temperature measurement devices available. Due to their non-linearity, thermistors are generally only suited for measurements over a narrow range, roughly 0–100 °C.
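One common way to do a multi-point calibration is to fit the Steinhart-Hart equation, 1/T = A + B·ln(R) + C·ln(R)³, through three measured (resistance, temperature) pairs. The sketch below solves for the three coefficients in closed form. The calibration points are made-up values for a hypothetical 10 kΩ NTC and are there only to exercise the code.

```python
import math


def fit_steinhart_hart(points):
    """Return (a, b, c) from three (resistance_ohm, temperature_c) pairs."""
    (r1, t1), (r2, t2), (r3, t3) = points
    l1, l2, l3 = (math.log(r) for r in (r1, r2, r3))
    y1, y2, y3 = (1.0 / (t + 273.15) for t in (t1, t2, t3))
    g2 = (y2 - y1) / (l2 - l1)
    g3 = (y3 - y1) / (l3 - l1)
    c = (g3 - g2) / (l3 - l2) / (l1 + l2 + l3)
    b = g2 - c * (l1 ** 2 + l1 * l2 + l2 ** 2)
    a = y1 - (b + c * l1 ** 2) * l1
    return a, b, c


def steinhart_hart_temperature_c(r_ohm, a, b, c):
    """Temperature in °C for a measured resistance."""
    ln_r = math.log(r_ohm)
    return 1.0 / (a + b * ln_r + c * ln_r ** 3) - 273.15


# Hypothetical calibration points: (resistance in ohm, temperature in °C).
CAL_POINTS = [(32650.0, 0.0), (10000.0, 25.0), (3603.0, 50.0)]
COEFFS = fit_steinhart_hart(CAL_POINTS)

if __name__ == "__main__":
    print("A, B, C =", COEFFS)
    print("6 kohm ->", round(steinhart_hart_temperature_c(6000.0, *COEFFS), 2), "°C")
```

The fit passes exactly through the three calibration points, so the accuracy between them depends on how well the points span the range of interest.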

IR Temperature sensor

Most people are somewhat familiar with infrared temperature sensing because they’ve likely seen thermal images at some point in the media — TV, movies, games. Or maybe they’ve used thermal imaging for home improvement projects.

All matter above 0 K emits thermal radiation. This is a fundamental property of our universe. Thermal radiation is electromagnetic (EM) radiation. IR temperature sensors are designed to measure this thermal radiation and infer the temperature of the source object.

Theory

What is EM radiation?

Radiation is defined as energy emitted by a source as particles or waves. EM radiation is energy that takes the form of electromagnetic waves. Visible light and radiant heat (infrared) are both EM waves. It is useful to think of EM waves as being analogous to ripples that radiate out from a stone dropped in a pond. Just as ripples exist in water, EM waves exist in the EM field. While the pond is a separate entity into which we can throw rocks and observe waves, the electromagnetic field is a fundamental building block of the universe. It exists everywhere from the atomic to galactic scale. So when you shine a flashlight into space, you are creating ripples in the ‘universal’ electromagnetic field that stretches across the universe.

But if everything is emitting thermal radiation all the time, why doesn’t everything cool down to 0 K? Because matter doesn’t just emit radiation, it also absorbs it. Stars like the Sun constantly radiate unimaginable quantities of thermal radiation into space, and any matter orbiting the Sun, such as the Earth and everything on it, absorbs some of this energy.

Temperature and IR radiation

The physics underlying the IR sensor is complex, and the relationship between the measured variable (thermal radiation) and output variable (temperature) is not linear. The fact that these complex sensors have become ubiquitous and easy to use at present is an impressive engineering achievement. This paper by Rogalski outlines the history of infrared detectors.

The fundamental principle of the IR temperature sensor is based on the Stefan-Boltzmann law, which states that the power density (W/m²) of thermal radiation emitted by an object is directly proportional to the fourth power of its absolute temperature.

For a blackbody* the law can be expressed as

P = σT⁴

- P is the power density

- σ is the Stefan-Boltzmann constant (approximately 5.67 × 10⁻⁸ W/(m²·K⁴))

- T is the temperature of the object in Kelvin

A blackbody is an idealized object (i.e. it doesn’t exist, but is used in physics to make the math simple) that absorbs all incident EM radiation.

The IR spectrum

Objects don’t emit EM radiation at a single wavelength; the emission spans a spectrum*. However, power is not evenly distributed across the spectrum.

The solar spectrum is shown in the image below. What this graph shows is that the sun outputs EM waves of different wavelengths, and at different intensities. Intensity is power per unit area (W/m²).

\* The spectrum is not necessarily continuous. Hydrogen, for example, does not emit and absorb all frequencies... physics is complex and the rabbit hole runs deep.

What’s more, the thermal radiation spectrum is temperature dependent! The image below shows the black-body spectrum at various temperatures.

As an object gets hotter, the spectrum shifts left and moves up. The peak wavelength gets shorter (i.e. the frequency increases), and the intensity of the emitted power increases. This makes intuitive sense, because things that are very hot generally emit visible light (hot metals, burning coal/wood etc.).
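The shift of the peak toward shorter wavelengths can be put into numbers with Wien's displacement law, λ_peak = b / T, where b is approximately 2.898 × 10⁻³ m·K. This law is not named above and is brought in here as a standard blackbody result. The function name and the example temperatures are illustrative.

```python
WIEN_CONSTANT_M_K = 2.897771955e-3  # Wien's displacement constant, m·K


def wien_peak_wavelength_um(t_k: float) -> float:
    """Wavelength in µm at which a blackbody at t_k kelvin emits most strongly."""
    return WIEN_CONSTANT_M_K / t_k * 1e6


if __name__ == "__main__":
    # Room temperature object, red-hot metal, and roughly the Sun's surface.
    for label, t_k in (("room temperature", 300.0),
                       ("red-hot metal", 1000.0),
                       ("solar surface", 5800.0)):
        print(f"{label:17s} {t_k:7.0f} K -> peak near {wien_peak_wavelength_um(t_k):.2f} µm")
```

Objects near room temperature peak at around 10 µm, well inside the infrared, while only very hot sources peak close to the visible band.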

Putting it all together

The sensing element inside an IR temperature sensor measures the power density in the IR spectral band from 8 to 14 µm, which consists of infrared waves invisible to the human eye. It then uses a modified form of the Stefan-Boltzmann law, with a coefficient for emissivity, to calculate temperature.

Emissivity is defined as the ratio of the energy radiated from a material’s surface to that radiated from a perfect emitter, known as a blackbody, at the same temperature and wavelength and under the same viewing conditions. A blackbody has an emissivity of 1.
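The role of the emissivity setting can be illustrated with the emissivity-scaled Stefan-Boltzmann law, P = ε·σ·T⁴, inverted to give T = (P / (ε·σ))^(1/4). This is a simplified sketch: it uses the total radiated power instead of only the 8 to 14 µm band, and it ignores reflected ambient radiation, so a real instrument's internal calculation will differ. The function names and example values are assumptions.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m²·K⁴)


def radiated_power_density(t_k: float, emissivity: float = 1.0) -> float:
    """Total radiated power density in W/m² for a surface at t_k kelvin."""
    return emissivity * STEFAN_BOLTZMANN * t_k ** 4


def inferred_temperature_k(power_density: float, emissivity: float = 1.0) -> float:
    """Temperature in kelvin inferred from power density and an emissivity setting."""
    return (power_density / (emissivity * STEFAN_BOLTZMANN)) ** 0.25


if __name__ == "__main__":
    # A surface at 350 K with a true emissivity of 0.5 (hypothetical values).
    power = radiated_power_density(350.0, emissivity=0.5)
    print("Correct setting (0.50):", round(inferred_temperature_k(power, 0.5), 1), "K")
    print("Wrong setting   (0.95):", round(inferred_temperature_k(power, 0.95), 1), "K")
```

Setting the emissivity higher than the true value makes the sensor read low, which is why shiny surfaces are easy to underestimate.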

Typical emissivities:

Note that emission and absorption are equal under steady state conditions, so emissivity can also be called absorptivity. What happens to the remaining radiation that is not absorbed? For an opaque object, it is reflected. Therefore emissivity and reflectivity must sum to 1.

Note that reflection is not simply something that occurs on the outer surface of an object. It also occurs internally, i.e. radiation emitted from the deeper layers of an object can be reflected back into the object at the surface.

So this gives a clue on how to gauge the emissivity of a surface: if it is shiny and reflective, it will have low emissivity. However, it is always best to check emissivity tables published by a trusted source.

Performance

A good quality commercial IR temperature sensor will have a repeatability of ±0.5°C. The accuracy of the IR temperature reading is dependent on instrument configuration (selecting the correct emissivity setting). Therefore IR temperature sensor performance is better described in terms of repeatability, which is the error in repeated measurements of the same source/object.

Conclusion

Different sensors are suited for different applications. To summarize:

- Thermocouples — Cheap. Best for measuring very high temperatures, or for use in tight spaces.

- RTD — Better accuracy, stability and linearity than thermocouples. Preferred choice of temperature sensor in industry.

- Thermistor — Most accurate temperature sensor but limited to applications under 100 °C.

- IR temperature sensor — Non-contact measurement of a wide range of temperatures.